LLMs still can’t answer everything you want them to—better data engineering can help.

If you’re a lawyer and have been following the deluge of AI-related headlines these past few months, you might conclude that “large language models” (LLMs) brought superhuman powers to the entire legal profession overnight.

If you’re a lawyer and have been following the deluge of AI-related headlines these past few months, you might conclude that “large language models” (LLMs) brought superhuman powers to the entire legal profession overnight. In reality, while LLMs have indeed unlocked tremendous value for lawyers, their capabilities can break down quickly when asked questions that stray from their domain of expertise. And, they will deliver a flagrantly erroneous answer with the same trademark confidence as a correct one.

This presents a huge challenge for lawyers as well as those developing practical legal applications on top of LLMs: where do you delineate which types of requests AI can reliably complete, and how do you tackle the more interesting tasks on the other side of the line?

LLMs have a long way to go to solve everything we want them to do – but smarter data engineering can get us there faster. In this post, we’ll outline some of the concerns lawyers – and builders – should consider when applying LLM technology to legal workflows, and provide valuable use cases for applying LLMs to legal work.

Unreliable prompt responses

It’s no coincidence that a stream of new applications has entered the market advertising automated question answering for fact-finding queries like:

- What is the $ amount of X provision?

- Which parties are involved in my transaction?

- What is “material adverse effect”?

Querying for facts with simple queries can be powerful, especially when navigating huge corpora of 100+ page documents. However, it’s easy to steer into dangerous waters, even with seemingly simple questions:

- How often do we refer to X provision?

- What is the total number of preferred stock options across classes?

- What is the most common phrasing for this definition for Client X?

If your documents don’t explicitly state the answers to these questions, in all likelihood an LLM will produce an incorrect response – even if it has all the context a person would need to produce the correct answer.

Now, let’s dive into why…

ChatGPT Can’t Count

You might be asking, “Why does it matter if a legal LLM can do math?”. In the examples below, we’ll outline how LLMs' inability to tackle complex math and abstract reasoning can impact critical prompt requests that lawyers might want to use when drafting contracts.

It’s a well-documented observation that LLMs struggle with complex math and abstract reasoning, to the great amusement of ChatGPT users everywhere. This “misbehavior,” though it can be mitigated somewhat with fine-tuning and building bigger models with more data, is actually a fundamental feature of how these models operate.

A language model’s specialty is, unsurprisingly, language. The recent wave of LLMs (made famous by ChatGPT) excel in mapping the complex chain of dependencies which form the building blocks of syntax and semantic relationships in a given text. However, as with most deep-learning models, there is no explicit stratification of syntax, semantics, facts, or cultural nuance, so relationships inferred by the model are irrevocably intertwined with the observations it finds in its training data.

As a result, it can guess that “pool” is a likely continuation of the sentence “I went swimming in the ___” because a pool is all of the following:

- a noun (as opposed to “eats”)

- bigger than a person (as opposed to a “toaster”)

- a liquid-y thing you can swim in (as opposed to a “nail salon”)

But the LLM never explicitly looks first for nouns, then big nouns, then big, liquidy nouns the way a person might guess in a game of “20 Questions.” The output is the result of the many hundreds of billions of calculations, each potentially capturing some element of these expectations, to output a set of probabilities for which “pool” is given the highest score, with similar words such as “lake,” or “water” scoring highly as well.

Generative models are first built on billions (even trillions) of completions like this, giving them a detailed understanding of language and even raw facts about the world. Similarly to how people learn to detect patterns from repeated examples with slight variation, generative models notice that “pool” and “lake” get used in similar circumstances, so they probably share common attributes. The same model might also notice, from math problems in its training data, that adding two numbers ending with the digit “5” results in a number ending with “0.” Basic arithmetic becomes a syntactic relationship like any other.

Once you start adding more than a handful of 3-digit numbers together, however, the number of syntactic relationships between all the digits that must be accounted for with exact precision overwhelms even GPT-4’s appetite for complexity.

The same behavior shows up when an LLM is asked to count, although for a slightly different reason. Depending on the complexity of what you ask it to count, more than 7 occurrences might as well be 5 or 8 for all GPT-4 cares. The inner workings of the model may show that each occurrence is accounted for and contributing to the output probabilities, but at what point should the probability that the first output is “9” suddenly shift back to “1”, followed by “0”? This requires extreme levels of precision completely atypical of linguistic relationships, precision which even minor complications can disrupt.

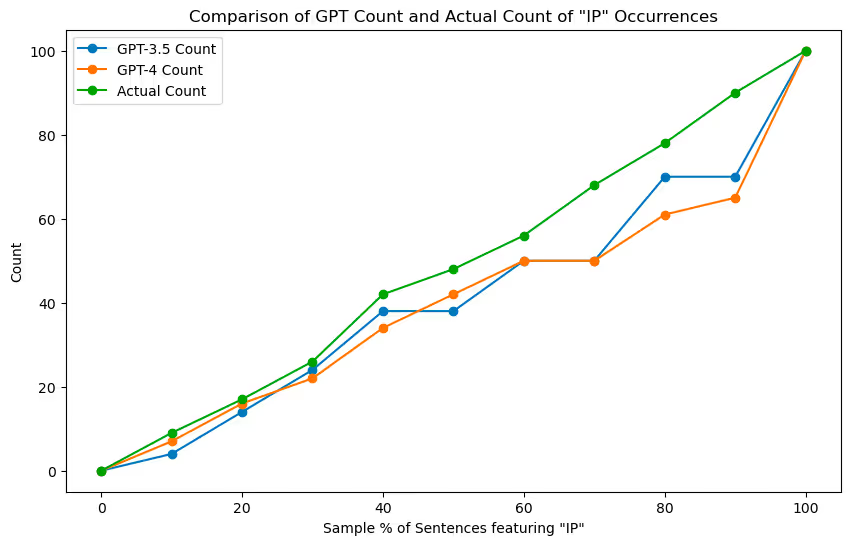

For instance, if you give ChatGPT a set of 100 random sentences containing a reference to any of “intellectual property,” “material adverse effect,” or “amendments,” and ask it to count occurrences of “intellectual property,” both GPT-3.5 and GPT-4 tend to undershoot by a significant margin:

In many cases, GPT-4 performs even worse than GPT-3.5. Both benefit from the simplification provided on the two extremes: either none of the sentences feature IP, or all of them do.

A common legal question in the realm of contract drafting like “What type of carve-outs are usually included in definition X for this client?” is even more complex than this experiment. In this case, the LLM has to juggle at least three tasks simultaneously:

- Identify text strings corresponding to individual carve-outs

- Group similar language together across examples (likely paraphrasing some of them)

- Count the groups and assess what qualifies as “usually”

Relying on an LLM alone to answer it could result in a completely arbitrary answer.

Teaching LLMs to count

The poor performance of generative models on counting and arithmetic can be mitigated in a few ways:

- Fine-tune on more counting and arithmetic examples

- Ask them to show their work

- Ask them to generate code that solves the problem and run that instead

Considering that [1] results in the creation of the world’s most expensive unreliable calculator (there may be an antique abacus lying around worth a few million dollars - but it would still be more reliable than fine-tuned GPT-4), we can consider it a fascinating but essentially academic endeavor. There have been some very interesting work on fine-tuning models for mathematical and logical word problems, however.

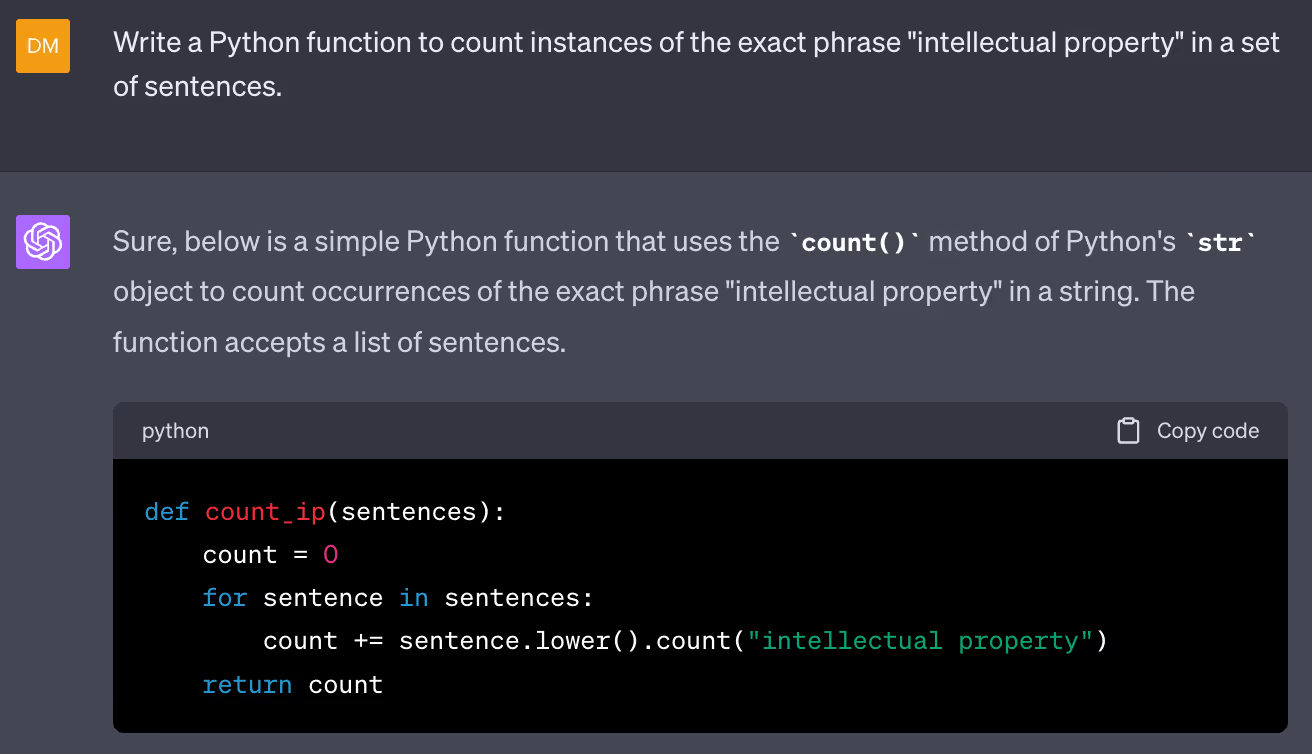

Option [2] attempts to address the fundamental weaknesses of LLMs with their strengths and has been shown to deliver big improvements in accuracy. For instance, if we rephrase the above prompt by asking first to list (and number) the appearances of “Intellectual Property,” and only then to answer how many appearances there are, GPT-4 will deliver the correct answer. The subtasks used to break down the larger task are all much simpler for an LLM, and performing them in sequence allows the model to use its first outputs as inputs for later tasks:

- Identify references that match a query or term

- Keep track of a counter that increases by 1 with each match

- Report the last iteration of the counter

This is probably what a person without a computer (or abacus) would do faced with the same question, and the main disadvantage is that it is significantly (in this case, 100x) slower.

But what if we… gave the LLM a computer?

Option [3] may at first seem counterintuitive if we measure LLMs’ intelligence on the same scale as that of a human: how can you trust a program with the counting abilities of a 2-year-old to write Python code (let alone for a legal application)? And who would be bonkers enough to execute the code, given the poorly understood vulnerabilities of LLMs to prompt injection attacks?

Luckily, computer programming is not too dissimilar to other paraphrasing and translation tasks that LLMs were designed for from the very beginning: take instructions written in English, and translate them to Python. Code generation has now become one of the primary advertised uses for LLMs, and AI researchers have found that the “complex reasoning” capabilities of LLMs are closely related to their exposure to coding examples in the training process.

Given that LLMs can code and even interpret error messages fairly well, many frameworks have been developed to create autonomous AI Agents that break down complex tasks, search the web and documents for information, and even execute and iterate on their own code.

As mentioned in this excellent post from Honeycomb on lessons from building an LLM-backed application, the problem with these frameworks for practical applications is that LLM outputs aren’t guaranteed to be perfect on the first go, and running through several iterations of prompting and code execution is likely to compound those errors. And high-performing commercial LLMs like GPT-4 are already slow: iterative runs could worsen this by orders of magnitude.

Engineering is Still the Answer

LLMs may not be the all-powerful legal assistants the AI hype-generators have conjured up, but that doesn’t prevent engineers from taking advantage of their indisputable strengths while building around their deficiencies. By anticipating the types of valuable questions lawyers will ask of these systems, applications can work off of a data model that carries the burden of counting and aggregating – and pre-compute common intermediary tasks to remove additional complexity from the LLM prompt.

.png)

.png)

.png)